Although humans can read scanned pages (just as we do paper pages), a computer understands these pages as no more than image files with black and white areas. In order to search, index, analyze, or rearrange these books, we need to provide the computer with electronic text as well as page images.

Like most things, this text can either be generated by hand or by machine. The automated creation of electronic text is generally known as optical character recognition (OCR). In this process, software attempts to “read” the page images and map each chunk of black pixels it sees to the correct character or symbol. OCR technology is used in large scale digitization projects like Google Books and HathiTrust, as well as in common software such as Adobe Acrobat. The full-text search functions that are offered in the ECCO and Evans databases rely on OCR technology.

OCR software is improving all the time. It works very well on modern books, but the older the book, the more the software struggles. For books printed before 1700, and for images that are blurry, spotty, or have other quality issues, it fails almost entirely, which is why the stand-alone EEBO database doesn’t include any searchable electronic text at all.

That’s where the TCP comes in: we work with vendors who manually key in the letters they see on the page. Each page is transcribed by more than one person, and the results are compared against one another, to generate electronic text that is 99.995% accurate. Keying is the greatest expense in the TCP’s budget. However, done by a person trained to identify the features of early modern texts, it is actually more cost effective than sorting through and correcting poor-quality OCR.

Why OCR Won’t Work

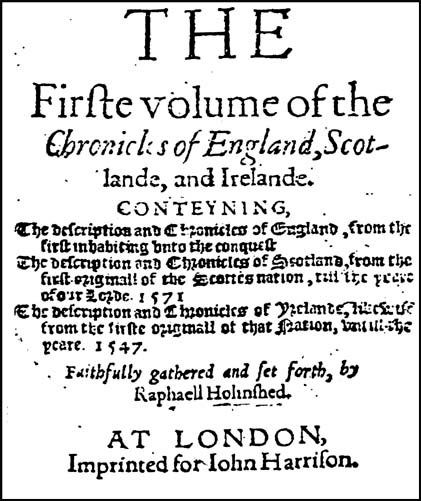

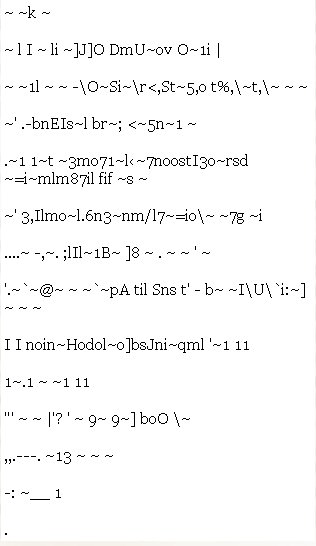

Here is an example of a scanned page from EEBO, followed by the same page as read by typical OCR software:

Even when OCR works well, it is usually hidden from the view of the user and used only for indexing/searching, because it contains enough errors to be distracting to the reader. An added benefit of the TCP’s manually keyed text is that it is clean enough to be displayed. Therefore, in addition to supporting full text searching, the TCP offers modern-type transcriptions of these texts.

Why we need human editors

The calculation of error rates and the incremental improvements to OCR through training, AI, and neural networks are complex matters that cannot be covered here. We would note only these few points, which informed our decisions:

- By “accuracy” and (its inverse) “error rate,” we mean the character-level accuracy and error rate, basically, the percentage of correctly recognized characters in the transcription vs. the number of characters present in the source. This can be complicated by many subjective factors, including characters missing in the source, or typos or other printing defects in the original, and the question of whether spacing, capitalization and punctuation errors have the same value as errors in recognizing characters. TCP over the years developed a set of policies that tried to take account of these complexities, and that tried to strike a balance between maximum capture and maximum accuracy, e.g. by encouraging creative “guessing” at damaged characters and penalizing over-reliance on the “illegible” flag. Those interested may want to consult a brief summary of the TCP policies, with examples.

- The best trained OCR, when applied to historical scripts, may achieve accuracy results in the 90s — as high as 95%. That sounds high, until you realize that if one character in ten or twenty is wrong, then the word-level accuracy rate may approach zero, i.e. every word or every other word may well contain an error.

- The images from which TCP works — most of them 1-bit (black and white) images scanned from microfilm — can present real challenges, even to human transcribers. In this example, our keyers did pretty well:

- Text often appears intermingled with other elements (graphics, for example) that puzzle OCR, and require a human transcriber not only to capture their words but to capture their relationship with the surrounding material.

- Finally the very notion of “character” (or “letter” and “number”) is often ambiguous. The glyph that we normally call “z”, for example, by our calculation can represent up to 14 different characters and abbreviated character strings, and it is up to a human editor to distinguish these. Only the fourth of these six examples actually contains “z” (as part of an abbreviation for “semis” = “half”); the others are a form of -m, the trailing half of the brevigraph for -que, the generic ‘et’ abbreviation stroke (here abbreviating “scilicet”), the symbol for “dram”, and the Middle English and early Scots character “yogh” (here with the value “y-” in the word “you”).